This article is part of a larger article on the work done at the SPANN Lab, IIT Bombay (Prof. U. B. Desai, Prof. S. N. Merchant).

Wireless Sensor Networks (WSNs) have become one of the most interesting areas of research in the past few years, primarily because of their large number of potential applications. WSN is an enabling technology; many believe it can revolutionize ICT (Information and Communication Technologies) the way the microprocessor revolutionized chip technology nearly 30 years ago. Advances in Micro-Electro-Mechanical Systems (MEMS) technology have facilitated the development of smart sensors, and these recent advances in WSNs have also led to the rapid development of real-time applications. In 2003, MIT's Technology Review placed WSNs at the top of its list of 10 emerging technologies that would impact our future. In this article we take a tour of how WSNs have evolved over the last decade, with emphasis on their applications.

The increasing interest in wireless sensor networks is easy to understand if we consider what they essentially are: a large number of small, self-powered sensing nodes that gather information or detect special events and communicate wirelessly, with the end goal of handing their processed data over to a base station. There are three main components in a WSN node: the sensor, the processor, and the radio for wireless communication. Processor and radio technology are reasonably mature; nevertheless, cost is still a major consideration for large-scale deployment. The inherent nature of WSNs makes them deployable in a variety of circumstances. They have the potential to be everywhere: on roads, in our homes and offices (smart homes), in forests, on battlefields, in disaster-struck areas, and even underwater. This pervasiveness leads to the “everyware” phenomenon of ubiquitous computing. Today we have entered the third wireless revolution, the “Internet of Things”, which uses wireless sensing and control technology to bridge the gap between the physical world of humans and the virtual world of electronics. The dream is to automatically monitor, predict, or respond to forest fires, avalanches, landslides, earthquakes, hurricanes, traffic, hospitals and much more, over wide areas and with thousands of sensors. This dream has come within reach thanks to the development of WSNs, also called Ubiquitous Sensor Networks (USN). Looking back, a lot of work has been done on protocols, collaborative information processing, dedicated operating systems such as TinyOS, dedicated database systems such as TinyDB, programming languages such as nesC, the IEEE 802.15.4 standard and the ZigBee stack built on it, and a large number of test deployments. Major WSN R&D initiatives have also been taken by multinationals such as Microsoft (Project Genome), Intel (WISP), IBM (IBM Zurich Sensor System lab and testbeds), and Sun Microsystems (SPOT).

Sensor networks provide endless opportunities, but at the same time pose formidable challenges, including deployment, localization, self-organization, navigation and control, coverage, energy, maintenance, and data processing. Because energy is a scarce and usually non-renewable resource, power consumption is a central design consideration for wireless sensor networks, whether they are powered by batteries or by energy harvesters. However, recent advances in low-power VLSI, embedded computing, and communication hardware, and in general the convergence of computing and communications, are making this emerging technology a reality in terms of processing, memory and energy. WSNs are generally deployed with non-renewable energy sources, but some applications, such as the Pervasive Sensor Environment (described later), use a renewable source, in this case solar power. Another key challenge in WSNs is middleware. As the name suggests, middleware sits between the operating system and the application. Its main purpose in sensor networks is to support the development, maintenance, deployment, and execution of sensing-based applications. Currently, programmers deal with too many low-level details of sensing and node-to-node communication, so the programming abstractions provided by middleware are central to its purpose. In many current projects, however, applications execute on bare hardware without a separate operating system component, so at this stage of WSN technology it is not clear on what basis future WSN middleware should be built. Middleware also plays a crucial role in leading to pervasive sensor systems. Ad-hoc sensor networks, although related to WSNs, are very different in terms of energy supply, number of sensor nodes, computational capabilities, memory and global identification. In this article we limit ourselves to WSNs.
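To make the energy constraint concrete, the short Python sketch below estimates node lifetime under duty cycling: a node awake a fraction d of the time draws an average current of d·I_active + (1 − d)·I_sleep, and its ideal lifetime is battery capacity divided by that average. All current and capacity values are assumptions chosen for illustration, not measurements of any particular mote.

```python
# Illustrative lifetime estimate for a duty-cycled sensor node.
# All figures below are assumptions, not datasheet values for a specific mote.
BATTERY_CAPACITY_MAH = 2000.0  # assumed usable battery capacity
ACTIVE_CURRENT_MA = 20.0       # assumed current with MCU and radio on
SLEEP_CURRENT_MA = 0.02        # assumed current in deep sleep


def average_current_ma(duty_cycle: float) -> float:
    """Time-weighted average current for a node awake a fraction `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in [0, 1]")
    return duty_cycle * ACTIVE_CURRENT_MA + (1.0 - duty_cycle) * SLEEP_CURRENT_MA


def lifetime_days(duty_cycle: float) -> float:
    """Ideal lifetime in days; ignores self-discharge, temperature and voltage cut-off."""
    return BATTERY_CAPACITY_MAH / average_current_ma(duty_cycle) / 24.0


if __name__ == "__main__":
    for d in (1.0, 0.1, 0.01):
        print(f"duty cycle {d:6.1%}: {lifetime_days(d):7.1f} days")
```

With these assumed numbers, cutting the duty cycle from 100% to 1% stretches the ideal lifetime from about 4 days to about a year, which is why duty cycling is a central WSN design lever.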

Since the inception of DARPA-funded research on “low power wireless integrated microsensors” in 1994, WSNs have attracted attention globally. In India, the technical institutes were the first to take up the task of contributing to this emerging field, with cutting-edge research that soon caught the attention of the government and the public as a way of solving real-world problems.

The major WSN projects undertaken in India were:

• DRDO (Defence Research and Development Organisation) project on theoretical aspects, mote development and deployment (with IISc)

• WSN for critical emergency applications like landslide prediction (Amrita University, IIT-Bombay, IIT-Delhi, IIT-Kgp)

• WSN for tracking and monitoring in underground mines (Central Mining Research Institute)

• Underwater wireless sensor networks (IIT-Bombay, Naval Physical & Oceanographic Laboratory)

• WSN for Agriculture (IISc, IIT-Bombay)

• Pollution monitoring (IIT-Delhi, IIM-Kolkata, IIT-Kgp, IIT-Bombay, IIT-Hyderabad)

• WSN for Biomed (IIT-Bombay)

• Many IITs also have WSN testbeds.

WSNs are a great enabler for component manufacturers, system integrators, software service providers, OEMs, application developers and other end users.

WSN technology has also started moving from active academic research to industry. This emerging area has inspired many start-up companies, such as Virtual Electronics Company (Roorkee), Dreamajax Technologies (Bangalore), Airbee Wireless (India), and Virtualwire (Delhi), as well as numerous small firms specialising in sensors. Big players like Infosys, TCS and Wipro also value this key technology. Infosys has dedicated R&D labs, Convergence and SETLabs, which pursue R&D in pervasive computing and wireless sensor networks. The TCS Innovation Lab in Mumbai specialises in wireless technology (mKrishi). Wipro and Infosys have been active partners in the Pervasive Sensor Environment project under the umbrella of the IU-ATC (Indo-UK Advanced Technology Centre), a £9.2 million project.

Here we describe some important, path-breaking WSN applications with significant social and economic impact:

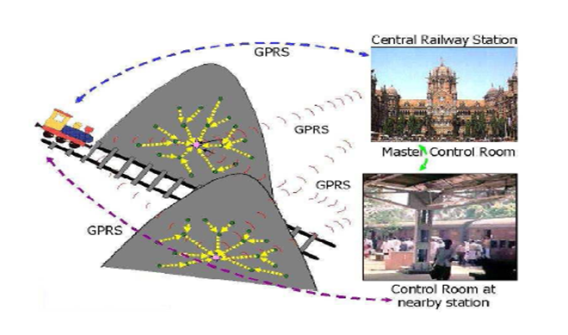

1. Senslide: Landslide Predictions

Senslide is a novel distributed sensor system for predicting landslides. The idea of predicting landslides using wireless sensor networks was originally conceived at the SPANN Lab in the EE Department, IIT Bombay. It arose out of a need to mitigate the damage caused by landslides to human lives and to the railway network in the hilly regions of Western India. With support from Microsoft Research India and collaboration from the Earth Science Department, IIT Bombay, Senslide became a joint research project among these three research groups. Having an inter-disciplinary team with expertise in each area has been invaluable in coming up with a solution. The system combines techniques from Earth Sciences, signal processing, distributed systems, and fault tolerance.

A unique feature of the design is that it combines several distributed-systems techniques to deal with the complexities of a sensor network environment where connectivity is poor and power budgets are very constrained, while still satisfying real-world safety requirements. Senslide uses an array of inexpensive single-axis strain gauges connected to cheap nodes (specifically, TelosB motes), each with a CPU, a battery, and a wireless transmitter. These sensors make point measurements at various parts of a rock, but make no attempt to measure the relative motion between rocks. The strategy is based on the simple observation that rock slides occur because of increased strain in the rocks. Thus, by measuring the cause of a landslide (strain) rather than its effect, one can predict landslides as easily as one could by measuring the incipient relative movement of rocks.
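A minimal sketch of the kind of fault-tolerant decision described above, assuming a simple per-gauge strain threshold and a quorum rule. Both the threshold and the quorum are hypothetical; Senslide's actual detection and fault-tolerance logic is not reproduced here. Requiring several distinct nodes to agree keeps one faulty gauge from raising an alarm on its own.

```python
from dataclasses import dataclass

# Hypothetical parameters for illustration; not taken from the Senslide design.
STRAIN_THRESHOLD = 500.0  # assumed per-gauge alert level, in microstrain
QUORUM = 3                # assumed number of distinct nodes that must agree


@dataclass
class Reading:
    node_id: int
    microstrain: float


def nodes_over_threshold(readings: list[Reading], threshold: float = STRAIN_THRESHOLD) -> set[int]:
    """IDs of nodes with at least one reading above the threshold."""
    return {r.node_id for r in readings if r.microstrain > threshold}


def landslide_alarm(readings: list[Reading], quorum: int = QUORUM) -> bool:
    """Alarm only when at least `quorum` distinct nodes report high strain,
    so a single faulty gauge cannot raise the alarm on its own."""
    return len(nodes_over_threshold(readings)) >= quorum


if __name__ == "__main__":
    sample = [Reading(1, 620.0), Reading(2, 580.0), Reading(3, 120.0), Reading(4, 710.0)]
    print(landslide_alarm(sample))  # True: nodes 1, 2 and 4 exceed the threshold
```

Counting distinct node IDs, rather than raw readings, means a single gauge that reports many high values still counts only once.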

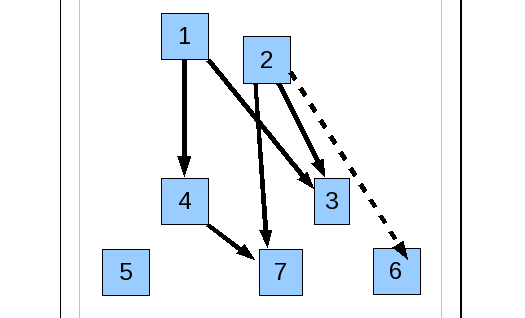

Figure 1: Senslide, Landslide Prediction

2. Landslide Predictions

Landslide prediction has been actively pursued at IIT-Bombay and at the Amrita Center for Wireless Networks and Applications (Amrita-WNA). Known for its successful completion of the Indo-European WINSOC project, Amrita-WNA is recognized worldwide today for deploying the first-ever wireless sensor network system for predicting landslides. The system uses wireless sensor technology to provide advance warning of an impending landslide, facilitating evacuation and disaster management. The Government of India has shown interest in deploying this system in all landslide-prone areas, including the Himalayas and the Konkan region. This system, India’s first cutting-edge wireless sensor network designed to detect landslides at least 24 hours before they occur, has been set up at Munnar in the high-range Idukki district of Kerala, where eight people lost their lives in the 2005 landslides.

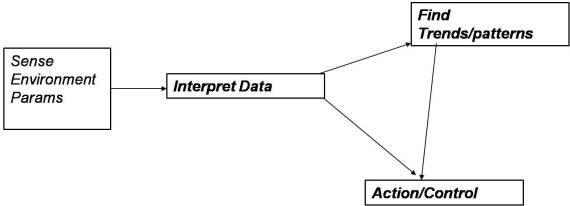

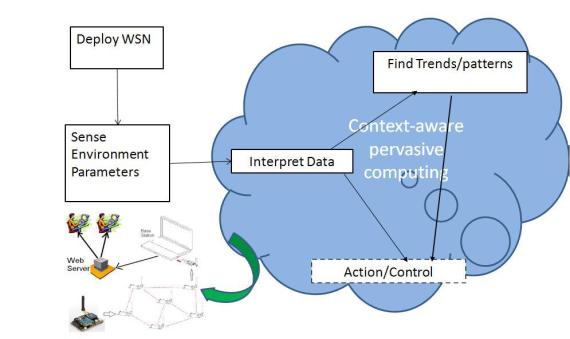

3. Pervasive Sensor Environment

Pervasive Sensor Environment is an ongoing project on environmental monitoring under the umbrella of the IU-ATC, funded by the Department of Science and Technology, Government of India. This application is of significant social and economic interest and is envisioned to support a diverse range of sensor-network-based services across a scalable infrastructure. Its broad aims are:

• Distributed pollution level measurement.

Sensing elements placed along roads, in buildings and in watercourses will provide information about both atmospheric pollution and the health threats posed by contaminants in resources vital to health, such as water. This links directly to the global health initiatives of the WHO and the UN.

• Traffic monitoring.

Traffic levels are set to grow, and both the movement patterns of vehicles and the quality of the roads matter to drivers and to the authorities responsible for road planning and maintenance.

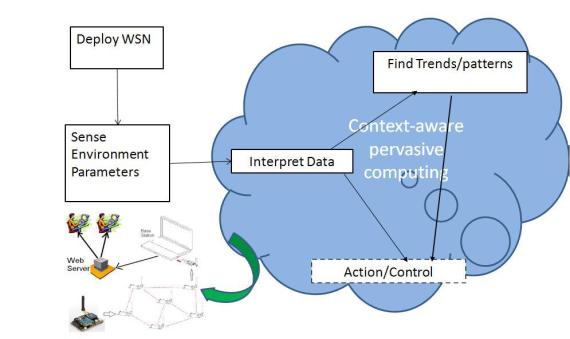

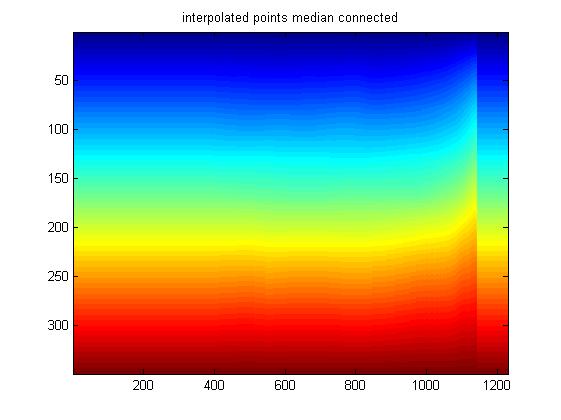

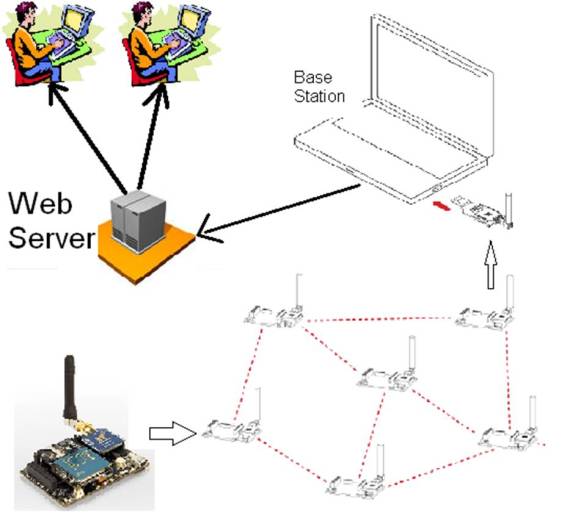

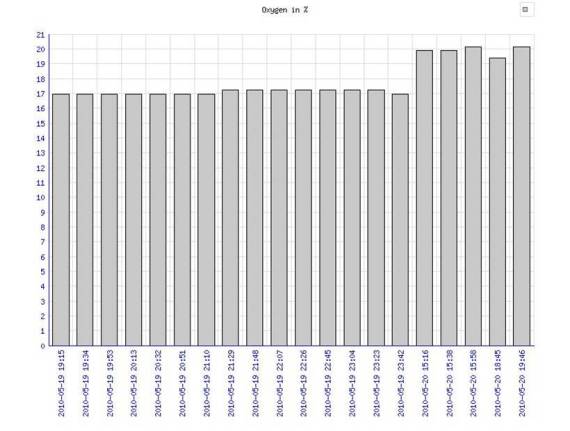

In pursuit of these goals, the project also tackles related problems such as low-cost mote development, scalable power-aware network protocols, middleware development, data warehousing for the scientific pollution repository, and a context framework for context-based pervasive computing. Real-time environment monitoring has been carried out on a smaller scale in Hyderabad and Mumbai using Libelium Waspmotes. The motes are equipped with a solar panel, GPS, gas sensors (CO2, O2, NO2 and CO), and other sensors (temperature, humidity and air pressure). Considering the cost of a full-scale deployment, low-cost motes are being developed at IIT-Hyderabad that aim to cost about one-tenth as much as the motes currently on the market. The data warehouse at IIT-Bombay has been developed using Microsoft SQL Server 2005, including SQL Server Analysis Services (SSAS) and SQL Server Integration Services (SSIS). Data insights are obtained using various built-in SSAS data mining algorithms, such as association rule mining, decision trees, and clustering. Ambient Air Quality Index (AQI) standardizations are also refined in the process.
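In common national AQI schemes, each pollutant's sub-index is obtained by linear interpolation between concentration breakpoints, I = (I_hi − I_lo)/(C_hi − C_lo) · (C − C_lo) + I_lo, and the overall AQI is the maximum sub-index. The Python sketch below illustrates that calculation; the NO2 breakpoint table is an assumed, simplified version loosely following India's National AQI and should be checked against the official standard before use.

```python
# Assumed, simplified NO2 breakpoints (24-hour average, ug/m3) loosely following
# India's National AQI; verify against the official standard before real use.
# Each row: (conc_low, conc_high, index_low, index_high)
NO2_BREAKPOINTS = [
    (0.0, 40.0, 0.0, 50.0),
    (40.0, 80.0, 50.0, 100.0),
    (80.0, 180.0, 100.0, 200.0),
    (180.0, 280.0, 200.0, 300.0),
    (280.0, 400.0, 300.0, 400.0),
]


def sub_index(conc: float, breakpoints: list[tuple[float, float, float, float]]) -> float:
    """Linearly interpolate a pollutant concentration onto the AQI scale."""
    for c_lo, c_hi, i_lo, i_hi in breakpoints:
        if c_lo <= conc <= c_hi:
            return (i_hi - i_lo) / (c_hi - c_lo) * (conc - c_lo) + i_lo
    raise ValueError(f"concentration {conc} is outside the breakpoint table")


def overall_aqi(sub_indices: dict[str, float]) -> float:
    """Overall AQI is the worst (maximum) of the pollutant sub-indices."""
    if not sub_indices:
        raise ValueError("need at least one sub-index")
    return max(sub_indices.values())


if __name__ == "__main__":
    no2 = sub_index(60.0, NO2_BREAKPOINTS)
    print(no2)  # 75.0
    print(overall_aqi({"NO2": no2, "CO": 120.0}))  # 120.0 (CO value is hypothetical)
```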

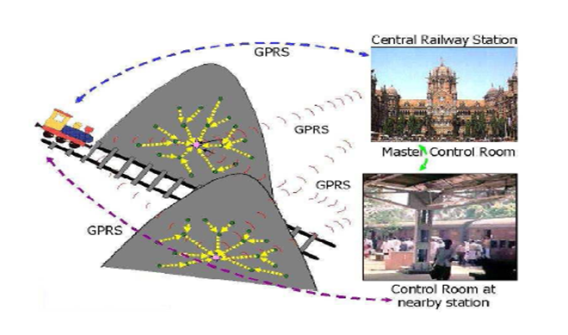

Figure 2: Pervasive Sensor Environment, Sensor Deployment in Bombay

Figure 3: Pervasive Sensor Environment, Ongoing Work

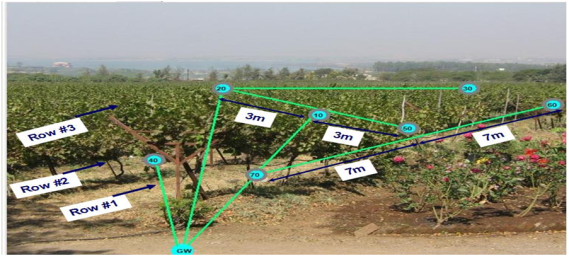

4. AgriSens: WSN for Precision Agriculture

AgriSens is a revolutionary agriculture-oriented WSN conceptualized and developed at IIT-Bombay, funded by the Department of Information Technology, Government of India. It is revolutionary in the sense that it uses WSNs to overcome the technical and social challenges of precision farming systems in India. Agriculture is a complex interaction of seed, soil, water, fertilizer and pesticides. Exploiting agricultural resources to bridge the supply/demand gap is leading to resource degradation and a subsequent decline in crop yields, which calls for optimal utilization of resources in managing the agricultural system. Precision farming, an information- and technology-based farm management system, aims to identify, analyze and manage variability within a field for optimum profitability, sustainability and protection of resources. Among the technologies used in precision farming (remote sensing, Global Positioning System (GPS), Geographical Information System (GIS), and WSN), WSN has been found suitable for collecting real-time data on weather, crop and soil parameters, and is thereby helpful in developing solutions for most agricultural processes involving the application of water, fertilizer, pesticides, etc.

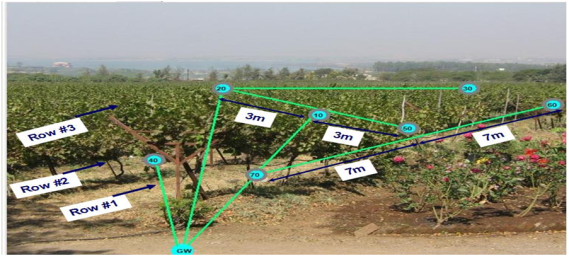

A two-year research project, deploying the WSN in a greenhouse at pilot scale and in a vineyard in Nashik district (India), made it possible to test the system's robustness and evaluate input requirements, i.e., the need for water and pesticides. AgriSens was first deployed in a greenhouse at IIT Bombay, mainly to test the ruggedness of the system for crops grown under controlled conditions. Once technical feasibility was established, it was extended to a larger scale in the vineyard. An embedded gateway base station performed elementary data aggregation and transmitted the sensory data via GPRS to an Agri-information server located at the SPANN Lab, Department of Electrical Engineering, IIT-Bombay, about 200 km from the field. The server also provided a web interface, updated in real time, showing the measured agri-parameters. The infection index was estimated using existing semi-empirical models, namely the Logistic and Generalized Beta models for predicting powdery mildew in grapes. The outputs of the models were the “infection index value” and the “timing of the different infection events”. A high risk of infection was observed to correspond to a wetness duration of about 11 ± 1 hours at an average ambient temperature of 25 °C.
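The reported observation (high infection risk at about 11 ± 1 h of wetness near 25 °C) can be turned into a simple alert rule over hourly sensor data. The Python sketch below is a hypothetical rule of thumb, not the Logistic or Generalized Beta models used in AgriSens; the wetness threshold and the temperature band are assumptions.

```python
# Hypothetical alert rule based on the reported observation (about 11 +/- 1 h of
# leaf wetness at ~25 C). This is NOT the Logistic or Generalized Beta model.
WETNESS_THRESHOLD = 0.5     # assumed: sensor reading above this counts as "wet"
MIN_WET_HOURS = 10          # lower edge of the reported 11 +/- 1 h window
TEMP_BAND_C = (22.0, 28.0)  # assumed tolerance band around 25 C


def longest_wet_spell(hourly_wetness: list[float]) -> int:
    """Length, in hours, of the longest run of consecutive wet readings."""
    longest = current = 0
    for w in hourly_wetness:
        current = current + 1 if w > WETNESS_THRESHOLD else 0
        longest = max(longest, current)
    return longest


def high_infection_risk(hourly_wetness: list[float], hourly_temp_c: list[float]) -> bool:
    """Flag high risk when a long wet spell coincides with a mean
    temperature near 25 C over the same window."""
    if len(hourly_wetness) != len(hourly_temp_c):
        raise ValueError("wetness and temperature series must be the same length")
    if not hourly_temp_c:
        return False
    mean_temp = sum(hourly_temp_c) / len(hourly_temp_c)
    low, high = TEMP_BAND_C
    return longest_wet_spell(hourly_wetness) >= MIN_WET_HOURS and low <= mean_temp <= high


if __name__ == "__main__":
    wetness = [1.0] * 11 + [0.0] * 13  # 11 wet hours, then dry
    temps = [25.0] * 24
    print(high_infection_risk(wetness, temps))  # True
```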

Figure 4: AgriSens, Sensor deployment at Sule Vineyard, Nashik (India)

5. Body Area Networks

The rapid growth in physiological sensors, low-power integrated circuits and wireless communication has enabled a new generation of wireless sensor networks, used to monitor traffic, crops, infrastructure and health. Body area networks form an interdisciplinary field that could allow inexpensive, continuous health monitoring with real-time updates of medical records via the Internet. A number of intelligent physiological sensors can be integrated into a wearable wireless body area network (WBAN), which can be used for computer-assisted rehabilitation or early detection of medical conditions.

At IIT-Bombay, physiological body signals are acquired with textile sensors integrated into the subject's attire. These sensors carry the signal through specially formulated conductive fabric fibers, which act as the wires of the WBAN architecture, to an On-Body Transmission Circuit (OBTC). Multiple sensors are connected to the OBTC depending on the applications chosen by the user and the power budget of the WBAN. The OBTC up-converts the signal to the UWB band (3.1 to 10.6 GHz) and transmits it through an on-body textile UWB antenna; the OBTC-to-antenna connections use the same conductive fibers that connect the sensors to the OBTC. The OBTC is also designed to power the entire system by harvesting heat emitted by the subject's body. A known limitation of such body-heat thermoelectric generators is that the generated power drops when the ambient temperature approaches body temperature, because their output depends on the temperature difference across them. A testbed free of metallic components, with the sensors integrated into a textile body patch, has been set up for subject monitoring. The on-body UWB textile antennas send and receive signals via smartphones, and the transmitted signals are received at a remote health-monitoring station. This approach would allow clinicians to assess quality of movement in home and community settings and feed this information back into the clinical decision process, optimizing rehabilitation on an individual basis. Using machine learning tools, the engine provides automatic diagnosis.
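The temperature-difference dependence follows from a simple thermoelectric model: the open-circuit voltage is S·ΔT for an effective Seebeck coefficient S, and the maximum power delivered to a matched load is (S·ΔT)²/(4R), where R is the module's internal resistance, so power falls with the square of ΔT. The parameter values below are assumptions for illustration, not figures from the IIT Bombay WBAN, and the model treats the whole body-to-ambient difference as the difference across the module, which overestimates real output.

```python
# Simple thermoelectric generator (TEG) model. Parameter values are assumptions
# for illustration, not measurements from the IIT Bombay WBAN.
SEEBECK_V_PER_K = 0.05         # assumed effective Seebeck coefficient of the module
INTERNAL_RESISTANCE_OHM = 5.0  # assumed internal resistance of the module


def teg_max_power_w(body_temp_c: float, ambient_temp_c: float,
                    seebeck: float = SEEBECK_V_PER_K,
                    r_internal: float = INTERNAL_RESISTANCE_OHM) -> float:
    """Maximum power into a matched load, (S * dT)^2 / (4 R).

    Treats the full body-to-ambient difference as the difference across the
    module, which overestimates real output (skin and air thermal resistances
    take up part of it)."""
    delta_t = abs(body_temp_c - ambient_temp_c)
    v_open = seebeck * delta_t  # open-circuit voltage
    return v_open ** 2 / (4.0 * r_internal)


if __name__ == "__main__":
    for ambient in (17.0, 27.0, 35.0, 37.0):
        print(f"ambient {ambient:4.1f} C -> {teg_max_power_w(37.0, ambient) * 1e3:6.2f} mW")
```

With these assumed values, moving the ambient temperature from 27 °C to 35 °C cuts the ideal output from 12.5 mW to 0.5 mW, a 25-fold drop, and the output vanishes when ambient equals body temperature.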

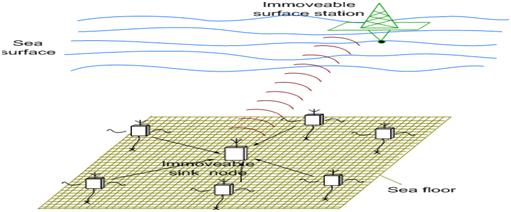

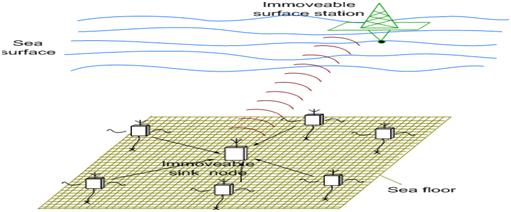

6. Underwater Sensors

Although Underwater Sensor Networks (UWSNs) have potential for a broad range of applications such as pollution monitoring, assisted navigation, disaster prevention, and seismic monitoring, the challenges are even more daunting. UWSN research at IIT-Bombay, in collaboration with the Naval Physical & Oceanographic Laboratory (NPOL), produced a novel approach to these challenges: the energy-optimized Path Unaware Layered Routing Protocol (PULRP). PULRP is an on-the-fly distributed algorithm, so node mobility and loss of connectivity due to multipath are handled, along with the issues of localization and time synchronization. PULRP takes energy into account when selecting potential relay nodes, making it an energy-optimized algorithm. IIT Bombay also has a testbed for UWSNs.
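As a rough illustration of layered, energy-aware relaying, the Python sketch below groups nodes into concentric layers around the sink and lets a sender forward to the neighbour in the next inner layer with the most residual energy. This is a simplified, centralized approximation under assumed parameters (a hypothetical layer width and known distances), not the published PULRP algorithm, in which relays are selected on the fly without prior path knowledge.

```python
import math
from dataclasses import dataclass
from typing import Optional

LAYER_WIDTH_M = 500.0  # hypothetical layer width (e.g., tied to acoustic range)


@dataclass
class Node:
    node_id: int
    distance_to_sink_m: float
    residual_energy_j: float


def layer_of(node: Node, layer_width: float = LAYER_WIDTH_M) -> int:
    """Layer 0 is the sink; layer k holds nodes at distances in ((k-1)w, kw]."""
    return math.ceil(node.distance_to_sink_m / layer_width)


def choose_relay(sender: Node, neighbours: list[Node],
                 layer_width: float = LAYER_WIDTH_M) -> Optional[Node]:
    """Among neighbours exactly one layer closer to the sink, pick the one with
    the most residual energy; return None if there is no such neighbour."""
    target_layer = layer_of(sender, layer_width) - 1
    candidates = [n for n in neighbours if layer_of(n, layer_width) == target_layer]
    return max(candidates, key=lambda n: n.residual_energy_j, default=None)


if __name__ == "__main__":
    sender = Node(0, 1200.0, 9.0)  # layer 3
    neighbours = [Node(1, 900.0, 5.0), Node(2, 800.0, 8.0), Node(3, 400.0, 10.0)]
    print(choose_relay(sender, neighbours).node_id)  # 2
```

Restricting candidates to the next inner layer guarantees progress toward the sink, while the energy criterion spreads the relaying load across nodes.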

Figure 5: UWSN, Network Architecture

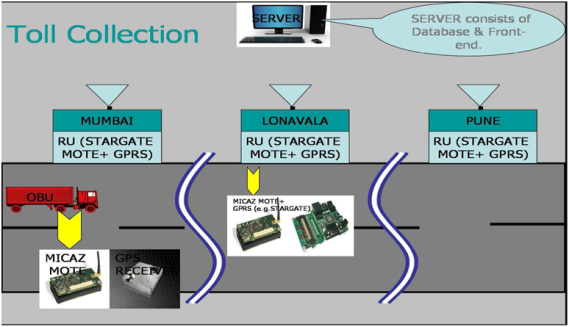

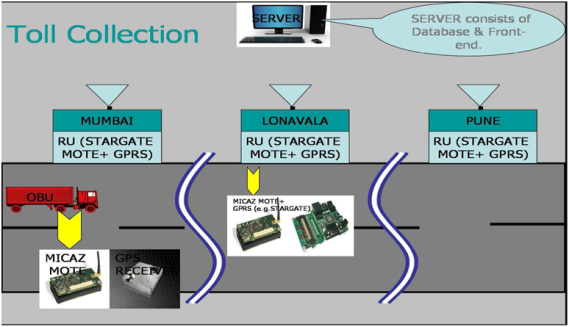

7. Electronic Toll Collection

An Electronic Toll Collection (ETC) system is a combination of techniques and technologies that allows vehicles to pass through a toll facility without requiring any action by the driver (such as stopping at the toll plaza to pay cash). This project was funded by the Department of Information Technology, Government of India. ETC starts with the capability to detect the presence of a vehicle. Based on this detection, vehicles are classified into different categories, and the classification is used to deduct the toll amount from the user's account. An ETC system consists of three components: an Automatic Vehicle Identification system, an Automatic Vehicle Classification system, and an Enforcement system. Wireless communication, sensors and a computerized system together uniquely identify each vehicle to collect the toll electronically. An important aspect of this architecture is that it requires very little infrastructure. ETC was implemented using motes programmed with TinyOS for the On-Board Unit and Roadside Unit, and a server using SQL. It was also implemented using MARWELL USB 0800 cards for the On-Board Unit, Roadside Unit, and server, with communication between these units established using UDP socket programming; the server uses SQL for toll collection.
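The toll flow (identify the vehicle, use its class to look up a fee, deduct it from the account) and the UDP messaging between units can be sketched with Python's standard `socket` module. The "id,class" message format, the tariff table, and the in-memory account dictionary standing in for the SQL server are all hypothetical, and here the On-Board Unit simply declares its class rather than being classified by roadside sensors.

```python
import socket

# Hypothetical tariff per vehicle class; values are illustrative only.
TARIFF = {"car": 50, "bus": 150, "truck": 200}


def deduct_toll(vehicle_id: str, vehicle_class: str, balances: dict[str, int]) -> bool:
    """Deduct the class-based toll; return False (hand over to enforcement)
    if the vehicle is unknown or its balance is insufficient."""
    fee = TARIFF[vehicle_class]
    if balances.get(vehicle_id, 0) < fee:
        return False
    balances[vehicle_id] -= fee
    return True


def roadside_unit_demo(balances: dict[str, int]) -> bool:
    """One On-Board Unit -> Roadside Unit exchange over UDP on localhost."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as rsu, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as obu:
        rsu.bind(("127.0.0.1", 0))  # Roadside Unit listens on a free port
        rsu.settimeout(2.0)
        # On-Board Unit announces itself in a hypothetical "id,class" format.
        obu.sendto(b"MH01AB1234,car", rsu.getsockname())
        data, _sender = rsu.recvfrom(1024)
        vehicle_id, vehicle_class = data.decode("ascii").split(",")
        return deduct_toll(vehicle_id, vehicle_class, balances)


if __name__ == "__main__":
    accounts = {"MH01AB1234": 500}  # in-memory stand-in for the SQL account table
    print(roadside_unit_demo(accounts), accounts)  # True {'MH01AB1234': 450}
```

UDP suits this exchange because each pass is a single short, self-contained message; a production system would still need acknowledgements or retries, since UDP does not guarantee delivery.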

Figure 6: Electronic Toll Collection, Architecture

Conclusion:

WSNs are among the biggest drivers of the third wave in computing, i.e., ubiquitous computing. First came mainframes, each shared by many people. We are now at the fading end of the personal computing era, with person and machine staring uneasily at each other across the desktop. Ubiquitous computing, the age of calm technology, when technology recedes into the background of our lives, has only just begun. WSNs have indeed made a huge social and economic impact, and the research by technical institutes, especially IIT-Bombay, has proved more than worthwhile in creating real-time applications and testbeds along the way.
